Sound Synthesis and Physical Modelling

As soon as digital computers had sufficient computing capability, scientists and musicians devoted time and effort to synthesising sounds algorithmically. Starting from the research of Max Mathews at Bell Labs in the late 1950s [1,2], a wealth of synthesis techniques was invented, including wavetable, additive and modulation synthesis [3,4]. These techniques can be described as abstract, i.e. they produce synthetic sound without an acoustic referent, and as a result the sound quality is invariably unnatural. Physical modelling synthesis came into existence as a response to the limitations in sound quality inherent in such abstract techniques. Physical modelling allows the user to produce the sounds of common acoustic instruments without the need for samples, and to control the output by changing a few musically intuitive parameters (geometry, forcing, input location, material properties, playing gestures, etc.). This is an intuitively appealing, yet challenging, approach to sound synthesis: the waveform is produced algorithmically, through the numerical solution of the dynamical system underlying a given instrument, whether a piano or a violin. Such a system may always be described by a coupled system of time-dependent partial differential equations (PDEs) [5]. (Interestingly, the very first theories of partial differential equations describing wave phenomena came out of the mid-1700s as a response to a musical problem, that of the vibrating string. A heated debate was sparked amongst some of the greatest mathematicians of the time: Jean d’Alembert, Leonhard Euler, and Daniel Bernoulli. The controversy was partially resolved by Lagrange and, more conclusively, by Fourier 50 years later [6].) Physical modelling techniques can be contrasted with sampling methods in that it is not the sound of an acoustic object which informs the digital copy (as is the case for sampling), but the digital copy which informs and indeed generates the sound.
Modelling here has a precise meaning: it refers to the mathematical description of sound production in a musical instrument.
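
As a point of reference, the simplest instance of such a PDE is the one-dimensional wave equation for an ideal, lossless string, the model at the heart of the eighteenth-century controversy. The symbols are introduced here for illustration only: $u(x,t)$ is the transverse displacement, $T$ the tension, $\rho$ the linear mass density and $L$ the string length.

$$
\frac{\partial^2 u}{\partial t^2} = c^2\,\frac{\partial^2 u}{\partial x^2},
\qquad c = \sqrt{T/\rho},
\qquad u(0,t) = u(L,t) = 0 .
$$

With fixed ends, the solutions are superpositions of standing waves at frequencies $f_m = m\,c/(2L)$, $m = 1, 2, \dots$ Realistic instrument models add stiffness, loss, nonlinearity and coupling terms to this starting point.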

Physical modelling synthesis first came to prominence in the 1980s, when various frameworks emerged. Two in particular can be viewed as milestones: digital waveguides and modal methods. Digital waveguides were developed primarily by Julius Smith and his team at Stanford, making use of efficient computational structures such as delay lines able to simulate wave propagation along uniform linear objects such as strings or acoustic tubes [7]. Modal methods, based on Fourier theory, were heavily investigated by researchers at IRCAM, Paris, with the aim of producing a large set of playable instruments under a common framework, such as Modalys or MOSAIC [8,9]. Modal methods rely on the decomposition of the system under study onto a series of modes, each of which is associated with a particular frequency of oscillation. Both types of synthesis can be viewed as simulations of an underlying instrument model.
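
The modal idea can be illustrated with a minimal sketch, assuming a linear system whose free response is a sum of independent, exponentially damped sinusoids. The function names, the mode parameters and the harmonic mode set below are hypothetical choices made for illustration; they are not taken from Modalys or MOSAIC.

```python
import math


def modal_synthesis(modes, num_samples, sample_rate=44100):
    """Sum the free responses of independent, exponentially damped modes.

    `modes` is a list of (frequency_hz, decay_rate_per_s, amplitude) tuples.
    All names and values are illustrative assumptions.
    """
    out = []
    for n in range(num_samples):
        t = n / sample_rate
        sample = 0.0
        for freq, decay, amp in modes:
            # Each mode contributes a damped sinusoid at its own frequency.
            sample += amp * math.exp(-decay * t) * math.sin(2.0 * math.pi * freq * t)
        out.append(sample)
    return out


def ideal_string_modes(f0=220.0, num_modes=10):
    """Hypothetical mode set: harmonics of f0 with 1/m amplitudes and
    a decay rate that grows with the mode number m."""
    return [(m * f0, 2.0 + 0.5 * m, 1.0 / m) for m in range(1, num_modes + 1)]


if __name__ == "__main__":
    signal = modal_synthesis(ideal_string_modes(), num_samples=44100)
    print(len(signal), max(abs(s) for s in signal))
```

Changing the list of modes changes the virtual object while the synthesis loop stays the same, which is what makes a common framework for many instruments possible. Because the modes are summed independently, the sketch only covers linear behaviour.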

The success of these early techniques was directly related to the possibility of achieving real-time synthesis (mandatory for any commercial implementation), but the range of musical instruments to which such techniques may be applied was limited: waveguides are not efficient for modelling systems in two or three dimensions, and modal decompositions are best suited to linear systems.

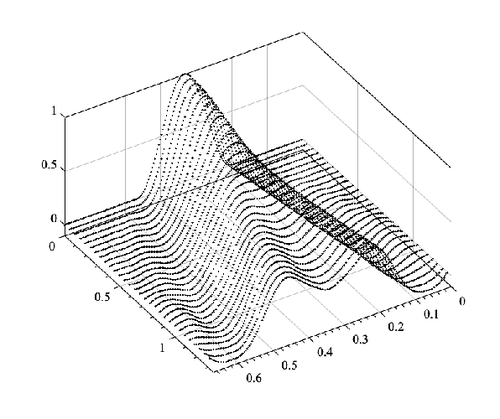

In the meantime, some researchers had relaxed the constraint of real-time performance and begun rethinking physical modelling sound synthesis as an application of mainstream numerical methods, particularly direct time-stepping methods such as the finite difference time domain (FDTD) method. These methods had been introduced to treat problems in electromagnetics [10], but they were soon extended to a large number of vibrating systems [11].
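
To make the notion of direct time stepping concrete, the following is a minimal sketch of the standard explicit finite difference scheme for an ideal string of unit length with fixed ends. It is an illustration under stated assumptions, not the scheme of any particular reference: the function name, the plucked initial condition and all parameter values are hypothetical. The grid is chosen so that the Courant number `c * k / h` does not exceed 1, which is the stability condition for this scheme.

```python
def simulate_string(f0=110.0, sample_rate=44100, num_samples=1000,
                    pluck_pos=0.3, pickup_pos=0.7):
    """Explicit finite difference scheme for an ideal fixed-fixed string.

    All names and default values are illustrative assumptions.
    Returns the displacement at the pickup position, one value per time step.
    """
    k = 1.0 / sample_rate            # time step
    c = 2.0 * f0                     # wave speed for unit length: f0 = c / 2
    n_seg = int(1.0 / (c * k))       # rounding down keeps c * k / h <= 1
    h = 1.0 / n_seg                  # grid spacing
    lam2 = (c * k / h) ** 2          # squared Courant number

    # Triangular initial displacement (a pluck) with zero initial velocity.
    peak = max(1, min(n_seg - 1, round(pluck_pos * n_seg)))
    u = [i / peak if i <= peak else (n_seg - i) / (n_seg - peak)
         for i in range(n_seg + 1)]
    u_prev = u[:]
    pickup = round(pickup_pos * n_seg)

    out = []
    for _ in range(num_samples):
        u_next = [0.0] * (n_seg + 1)     # end points stay clamped at zero
        for i in range(1, n_seg):
            # Centred differences in time and space.
            u_next[i] = (2.0 * u[i] - u_prev[i]
                         + lam2 * (u[i + 1] - 2.0 * u[i] + u[i - 1]))
        out.append(u_next[pickup])
        u_prev, u = u, u_next
    return out


if __name__ == "__main__":
    samples = simulate_string()
    print(len(samples), max(abs(s) for s in samples))
```

Every grid point is updated at every time step, so the cost grows with the size of the grid; this is a large part of why such methods were initially explored without a real-time constraint.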

In the early days, researchers were primarily interested in understanding the behaviour of musical instruments, rather than in real-time sound synthesis as such. After pioneering work in the 1970s and 1980s (see the works by Ruiz [12], Bacon and Bowsher [13], and Boutillon [14]), such work began in earnest in the 1990s, primarily due to Chaigne and associates [15-17]. Since these early developments, FDTD methods have been increasingly applied to various systems (strings, bars, plates, acoustic tubes, etc.), leading to synthesis for systems which were out of reach of the earlier techniques [18].

In parallel with the problem of simulation, the question of control soon became prominent. Early experimentation in control can be traced back to the late 1980s, when researchers from the Association pour la Création et Recherche dans les Outils d’Expression (ACROE) in Grenoble, France, began experimenting with transducers and virtual bows within the CORDIS-ANIMA real-time framework (a network of masses and springs) [19-21]. Later, Nichols created vBow, a mechanical bow with various degrees of freedom equipped with encoders and actuators. The encoders sent messages to a digital waveguide model of a violin, to control pressure, velocity, and bow rotation [22,23]. The software instrument, in turn, sent output messages to the actuators in order to produce tactile feedback on the bow. The MIKEY project [24] developed a rudimentary framework to control various instruments (grand piano, harpsichord, etc.) using the same keyboard (the actuators would produce different stimuli according to the different instruments). Cymatic was a real-time physical modelling engine, similar to CORDIS-ANIMA in that it made use of point masses connected by springs to create sound. Cymatic, developed by David Howard and associates, could be controlled by means of a joystick and a mouse with force feedback to improve realism [25,26].

Current directions in physical modelling synthesis

Today, the frontiers of physical modelling extend far beyond academia and embrace fields outside of musical acoustics: a quick survey of commercial music software branded as physical modelling gives clear evidence of the growing appeal of such techniques among the general public, see e.g. [27]. Commercial applications have appeared hand-in-hand with a growing body of academic work but have not yet reached maturity: many current applications are well out of reach even on modern consumer hardware [28].

The PhD work of Chabassier represents the most complete reference on piano modelling available today. A numerical model of a Steinway model D grand piano was constructed using advanced finite-element discretisation of the strings, the piano soundboard and the surrounding acoustic field. The resulting large numerical simulation was run on a dedicated distributed computing facility, as the code requires several hours of computation per second of output; see e.g. [29-31]. In the wake of this research, Chaigne has continued investigating the problem of piano acoustics, with the aim of understanding how design changes have contributed to shaping modern piano tones [32,33]. Five historic pianos from the Stein-Streicher dynasty in Vienna have been modelled and studied. Results show the importance of the thickness of the piano, in relation to increased string tension for higher energy transfers, see e.g. [34-36]. The design of modern piano soundboards is the subject of the MAESSTRO project, shared between various French laboratories [37]. A model of the harpsichord employing digital waveguides and samples was developed by Välimäki and associates [38].

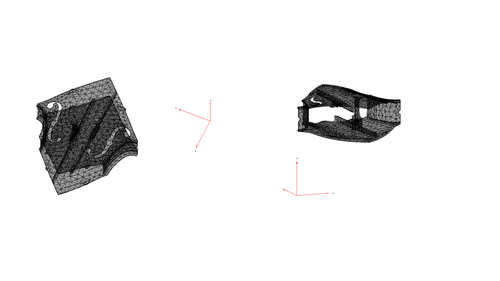

Bowed string simulation was the subject of the work of Desvages and Bilbao [39]. The recent purpose-built Musical Acoustics Lab in Cremona, Italy, is currently investigating the acoustics of bowed instruments. The lab works in close connection with the Museum of the Violin, which includes a large collection of instruments from the Stradivarius era. The lab is equipped with a range of scientific measurement equipment in order to investigate problems such as the sound radiation of violins under playing conditions. Numerical approaches have also been explored for the dynamic analysis of top and back plates [40-43]. Esteban Maestre [43] has implemented digital waveguides along with a radiativity model for real-time sound synthesis of the violin.

Outside of stringed instruments, physics-based simulations constitute an important field of research in computer graphics, see e.g. the work of Chadwick et al. on shell sounds [44]; the review by Müller et al. [45]; and the work by Schweickart et al. on animating elastic rods [46]. The upcoming Marie Curie-funded project VRACE [47] focuses on the problem of audio in virtual and augmented realities. As an Innovative Training Network (ITN), it includes a large network of universities and industrial partners and aims at providing physically correct and perceptually convincing soundscapes in virtual reality, including physically modelled rendered sound.

[1] C. Roads. The Computer Music Tutorial. MIT Press, Cambridge, USA, 1996.

[2] M. Mathews and J. Miller. Music IV Programmer’s manual. Bell Telephone Laboratories, Murray Hill, USA, 1963.

[3] J-C. Risset. Catalog of computer synthesised sound. Bell Telephone Laboratories, Murray Hill, USA, 1969.

[4] J. Chowning. The synthesis of complex audio spectra by means of frequency modulation. J Audio Eng Soc, 21(7):526–534, 1973.

[5] S. Bilbao. Numerical Sound Synthesis: Finite Difference Schemes and Simulation in Musical Acoustics. Wiley, Chichester, UK, 2009.

[6] G. F. Wheeler and W. P. Crummett. The vibrating string controversy. Am J Phys, 55(1):33–37, 1987.

[7] J. Smith. Physical modelling using digital waveguides. Comput Music J, 16(4):74–91, 1992.

[8] G. Eckel, F. Iovino, and R. Caussé. Sound synthesis by physical modelling with Modalys. In Proceedings of the International Symposium on Musical Acoustics, Dourdan, France, 1995.

[9] D. Morrison and J.-M. Adrien. Mosaic: A framework for modal synthesis. Comput Music J, 17(1):45–56, 1993.

[10] K. Yee. Numerical solution of initial boundary value problems involving Maxwell's equations in isotropic media. IEEE T Antenn Propag, 14(3):302–307, 1966.

[11] J. C. Strikwerda. Finite difference schemes and partial differential equations, 2nd ed. SIAM, Philadelphia, USA, 2004.

[12] P. Ruiz. A technique for simulating the vibrations of strings with a digital computer. Master’s thesis, University of Illinois, 1969.

[13] R. Bacon and J. Bowsher. A discrete model of the struck string. Acustica, 41:21–27, 1978.

[14] X. Boutillon. Model for piano hammers: Experimental determination and digital simulation. J Acoust Soc Am, 83(2):746–754, 1988.

[15] A. Chaigne. On the use of finite differences for musical synthesis. Application to plucked stringed instruments. J Acoustique, 5(2):181–211, 1992.

[16] A. Chaigne and A. Askenfelt. Numerical simulations of struck strings I. A physical model for a struck string using finite difference methods. J Acoust Soc Am, 95(2):1112–1118, 1994.

[17] A. Chaigne and C. Lambourg. Time-domain simulation of damped impacted plates. I. Theory and experiments. J Acoust Soc Am, 109(4):1422–1432, 2001.

[18] V. Välimäki, J. Pakarinen, C. Erkut, and M. Karjalainen. Discrete time modeling of musical instruments. Rep Prog Phys, 69:1–78, 2006.

[19] J. Florens, A. Razafindrakoto, A. Luciani, and C. Cadoz. Optimized real time simulation of objects for musical synthesis and animated image synthesis. In Proceedings of the International Computer Music Conference (ICMC1986), Den Haag, The Netherlands, October 1986.

[20] C. Cadoz, L. Lisowski, and J. Florens. A modular feedback keyboard design. Comput Music J, 14(2):47–51, 1990.

[21] C. Cadoz, A. Luciani, and J.L. Florens. CORDIS-ANIMA: A modeling and simulation system for sound and image synthesis: The general formalism. Comput Music J, 17(1):19–29, 1993.

[22] C. Nichols. The vBow: Development of a Virtual Violin Bow Haptic Human-Computer Interface. In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME2002), Dublin, Ireland, May 2002.

[23] C. Nichols. The vBow: A virtual violin bow controller for mapping gesture to synthesis with haptic feedback. Organ Sound, 7(2):215–220, 2002.

[24] R. Oboe and G. De Poli. Multi-instrument virtual keyboard the MIKEY project. In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME2002), Dublin, Ireland, May 2002.

[25] D. M. Howard, S. Rimell, and A. D. Hunt. Force feedback gesture controlled physical modelling synthesis. In Proceedings of the International Conference on New Interfaces for Musical Expression (NIME2003), Montreal, Canada, May 2003.

[26] D. M. Howard and S. Rimell. Cymatic: A tactile controlled physical modelling instrument. In Proceedings of the International Conference on Digital Audio Effects (DAFX-03), London, UK, September 2003.

[27] J. Albano. 7 modeled instruments you might not believe are emulations. Ask Audio [Link], March 2017. (Accessed June 2019).

[28] S. Bilbao, B. Hamilton, A. Torin, C. J. Webb, P. Graham, A. Gray, K. Kavoussanakis, and J. Perry. Large scale physical modeling synthesis. In Proceedings of the Stockholm Musical Acoustics Conference/Sound and Music Computing Conference (SMAC-SMC2013), Stockholm, Sweden, July/August 2013.

[29] J. Chabassier, A. Chaigne, and P. Joly. Modeling and simulation of a grand piano. J Acoust Soc Am, 134(1):648–665, 2013.

[30] J. Chabassier and P. Joly. Energy Preserving Schemes for Nonlinear Hamiltonian Systems of Wave Equations. Application to the Vibrating Piano String. Comput Method Appl M, 199:2779–2795, 2010.

[31] J. Chabassier. List of publications on piano modelling. [Link], 2009-2013. (Accessed June 2019).

[32] PAPA. Predictive Approach in Piano Acoustics, 2014-2016. Austrian Science Fund (FWF), grant agreement n M1653 granted to A. Chaigne.

[33] FPM2. From Physical Modelling to Piano Making, 2016-2017. Austrian Science Fund (FWF), grant agreement n P29386 granted to A. Chaigne.

[34] A. Chaigne. Reconstruction of hammer force from string velocity. J Acoust Soc Am, 140(5):3504–3517, 2016.

[35] A. Chaigne, J. Chabassier, and M. Duruflé. Energy analysis of structural changes in pianos. In Proceedings of the Third Vienna Talk on Music Acoustics, Vienna, Austria, September 2015.

[36] A. Chaigne. The making of pianos: A historical view. Musique et technique, 8:10–21, 2017.

[37] MAESSTRO. Modélisations Acoustiques, Expérimentations et Synthèse Sonore pour Tables d’haRmonie de pianO. [Link] (Accessed June 2019), 2014-current. ANR - Agence Nationale de la Recherche, grant agreement ANR-14-CE07-0014.

[38] V. Välimäki, H. Penttinen, J. Knif, M. Laurson, and C. Erkut. Sound synthesis of the harpsichord using a computationally efficient physical model. EURASIP J Adv Sig Pr, 2004(7):860718, 2004.

[39] C. Desvages and S. Bilbao. Two-polarisation physical model of bowed strings with nonlinear contact and friction forces, and application to gesture-based sound synthesis. Appl Sci, 6(5):135, 2016.

[40] A. Canclini, L. Mucci, F. Antonacci, A. Sarti, and S. Tubaro. A methodology for estimating the radiation pattern of a violin during the performance. In Proceedings of the European Signal Processing Conference (EUSIPCO), Nice, France, 2015.

[41] M. Buccoli, M. Zanoni, F. Setragno, A. Sarti, and F. Antonacci. An unsupervised approach to the semantic description of the sound quality of violins. In Proceedings of the European Signal Processing Conference (EUSIPCO), Nice, France, 2015.

[42] R. Corradi, A. Liberatore, S. Miccoli, F. Antonacci, A. Canclini, A. Sarti, and M. Zanoni. A multi-disciplinary approach to the characterization of bowed string instruments: the Musical Acoustics Lab in Cremona. In Proceedings of the 22nd International Congress on Sound and Vibration (ICSV22), Florence, Italy, 2015.

[43] E. Maestre, G.P. Scavone, and J.O. Smith. Joint modeling of bridge admittance and body radiativity for efficient synthesis of string instrument sound by digital waveguides. IEEE T Audio Speech, 25(5):1128–1139, 2017.

[44] N. Chadwick, S. S. An, and D. L. James. Harmonic shells: a practical nonlinear sound model for near-rigid thin shells. ACM Trans. Graph., 28(5):119, 2009.

[45] M. Müller, J. Stam, D. James, and N. Thürey. Real-time physics: class notes. In ACM SIGGRAPH 2008 classes, Los Angeles, USA, August 2008.

[46] E. Schweickart, D. James, and S. Marschner. Animating elastic rods with sound. ACM Trans. Graph., 36(4):115, 2017.

[47] VRACE. Virtual Reality Audio for Cyber Environments. [Link] (Accessed June 2019), 2019-2022. Marie Sklodowska-Curie Actions/Innovative Training Network, grant agreement No 812719.