
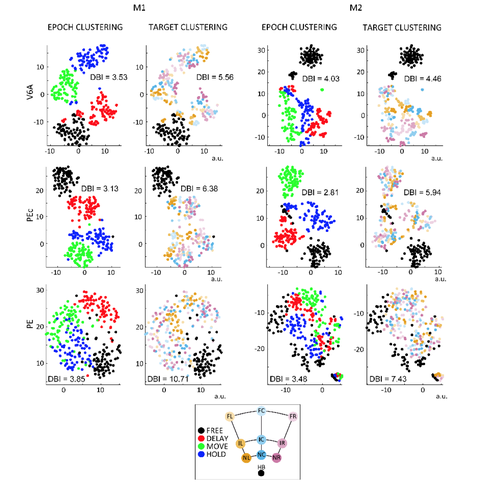

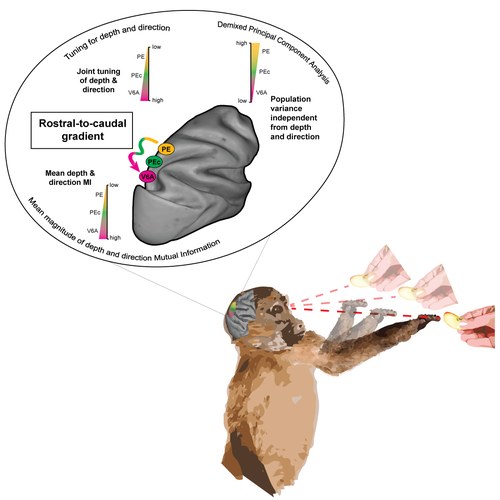

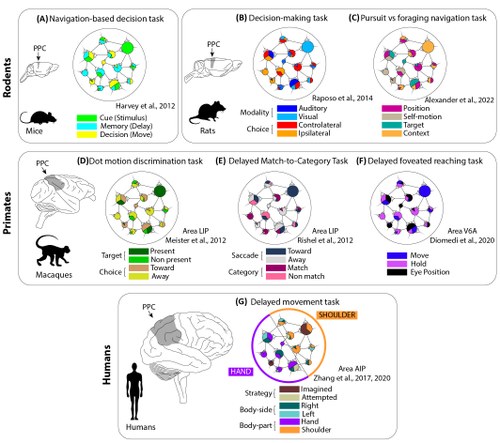

Neuronal populations in parietal and frontal cortices orchestrate a series of visuomotor transformations crucial for executing successful reaching movements. Two key nodes in this network are the dorsal premotor area F2 and the medial posterior parietal area V6A, which are strongly and reciprocally connected (Gamberini et al., 2021). Research on premotor areas has focused mainly on center-out reaches, which do not account for reach depth. It is therefore unknown whether tuning to spatial and motor variables, such as reach direction and depth, is similar in frontal and parietal cortices, and whether there are differences in the temporal evolution of activity that reflect functional specializations. To fill this gap, we recorded single-neuron activity from two regions of the medial fronto-parietal circuit in macaques performing an instructed-delay reaching task toward targets varying in both direction and depth. We then compared how various spatial and temporal movement parameters are represented at both the single-neuron and population levels across these two network nodes. Our results reveal that neurons in the medial posterior parietal cortex (mPPC) exhibit sharper spatial tuning, with a particularly robust representation of target depth, than the broader, more gradual activation patterns observed in the dorsal premotor cortex (PMd). Moreover, decoding accuracy for both direction and depth was higher in mPPC. These findings suggest that mPPC is specialized for accurate and reliable spatial encoding, which is essential for dynamic sensorimotor transformations, whereas PMd may primarily support the planning and initiation of motor actions directed toward a continuum of spatial locations.

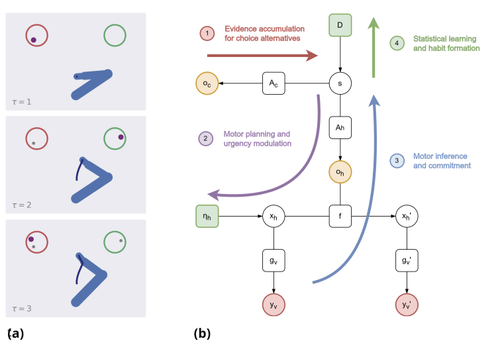

Decision-making is often conceptualized as a serial process in which sensory evidence for the choice alternatives is accumulated until a threshold is reached, at which point a decision is made and an action is executed. This decide-then-act perspective has successfully explained various facets of perceptual and economic decisions in the laboratory, where action dynamics are usually irrelevant to the choice. However, living organisms often face another class of decisions, called embodied decisions, which require selecting between potential courses of action to be executed in a timely manner in a dynamic environment: for example, a lion deciding which gazelle to chase and how fast to run. Studies of embodied decisions reveal two aspects of goal-directed behavior that stand in stark contrast to the serial view. First, decision and action processes can unfold in parallel; second, action-related components, such as the motor costs associated with selecting a particular choice alternative or with "changing one's mind" between alternatives, exert a feedback effect on the decision taken. Here, we show that these signatures of embodied decisions emerge naturally in active inference, a framework that optimizes perception and action simultaneously according to the same imperative (free energy minimization). We show that optimizing embodied choices requires a continuous feedback loop between motor planning (where beliefs about choice alternatives guide action dynamics) and motor inference (where action dynamics finesse beliefs about choice alternatives). Furthermore, our active inference simulations reveal the normative character of embodied decisions in ecological settings, namely, achieving an effective balance between high accuracy and a low risk of missing valid opportunities.
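The serial decide-then-act view described above is often formalized as a noisy evidence accumulator that commits to a choice when a bound is crossed. The Python below is a minimal sketch of that contrasting serial model, not of the active inference model proposed here; it assumes a constant drift, Gaussian noise and symmetric bounds, and the function name and parameters are illustrative.

```python
import random
from typing import Optional, Tuple


def accumulate_to_bound(drift: float, threshold: float, noise_sd: float = 1.0,
                        dt: float = 0.01, max_steps: int = 100_000,
                        seed: Optional[int] = None) -> Tuple[int, float]:
    """Serial 'decide-then-act' sketch: integrate noisy evidence until a bound is hit.

    Returns (choice, decision_time): choice is +1 or -1 depending on which bound
    was crossed, or 0 if neither bound was reached within max_steps.
    """
    rng = random.Random(seed)
    evidence = 0.0
    for step in range(1, max_steps + 1):
        # Euler-Maruyama step: constant drift plus Gaussian noise scaled by sqrt(dt)
        evidence += drift * dt + noise_sd * dt ** 0.5 * rng.gauss(0.0, 1.0)
        if evidence >= threshold:
            return 1, step * dt
        if evidence <= -threshold:
            return -1, step * dt
    return 0, max_steps * dt


if __name__ == "__main__":
    # In the serial view, an action would be launched only after this returns.
    trials = [accumulate_to_bound(drift=1.0, threshold=1.0, seed=s) for s in range(500)]
    p_upper = sum(1 for choice, _ in trials if choice == 1) / len(trials)
    print(f"P(upper bound) = {p_upper:.2f}")
```

In this sketch, action plays no role until the bound is reached. Embodied decisions relax exactly this assumption.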

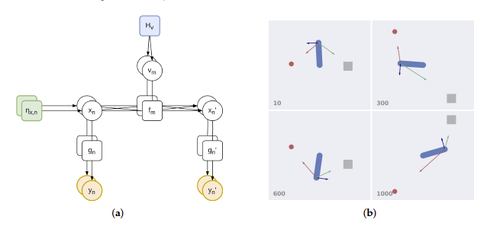

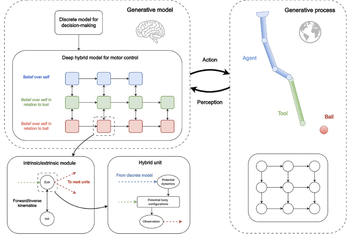

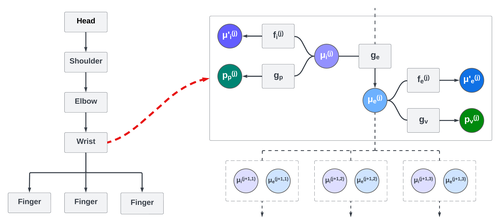

To determine an optimal plan for complex tasks, one often has to deal with dynamic and hierarchical relationships between several entities. Traditionally, such problems are tackled with optimal control, which relies on the optimization of cost functions; a recent biologically motivated proposal instead casts planning and control as an inference process. Active inference assumes that action and perception are two complementary aspects of life, whereby the role of the former is to fulfill the predictions inferred by the latter. Here, we present an active inference approach that exploits discrete and continuous processing, based on three features: the representation of potential body configurations in relation to the objects of interest; the use of hierarchical relationships that enable the agent to easily interpret and flexibly expand its body schema for tool use; and the definition of potential trajectories related to the agent's intentions, used to infer and plan with dynamic elements at different temporal scales. We evaluate this deep hybrid model on a habitual task: reaching a moving object after picking up a moving tool. We show that the model can tackle this task under different conditions. This study extends past work on planning as inference and advances an alternative direction to optimal control.
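For readers new to the framework, the quantity that active inference minimizes for both perception and action is usually the variational free energy. The standard form, for observations $o$, hidden states $s$, a generative model $p(o, s)$ and an approximate posterior $q(s)$, is given below; it is a general definition, not the specific deep hybrid model described in this abstract.

$$
F[q] = \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big] = D_{\mathrm{KL}}\big[q(s) \,\|\, p(s \mid o)\big] - \ln p(o)
$$

Because the KL divergence is non-negative, $F$ is an upper bound on surprise, $-\ln p(o)$. Perception lowers $F$ by updating $q(s)$, while action lowers it by changing $o$ so that observations match predictions.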

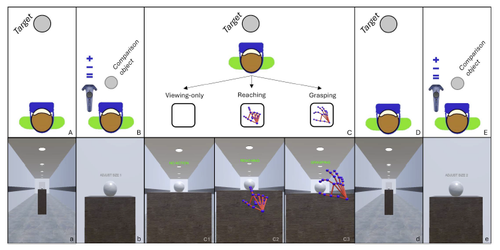

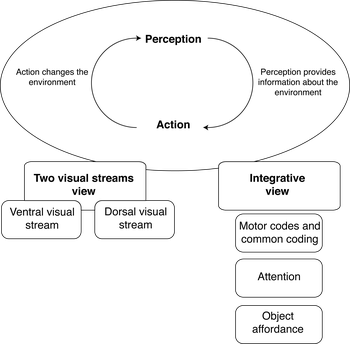

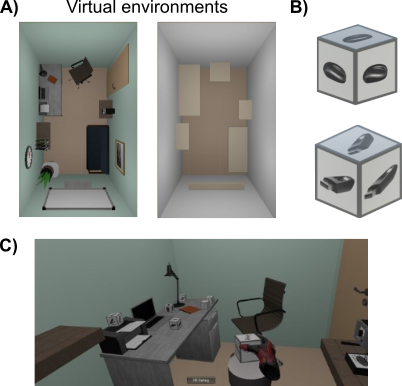

In virtual environments (VEs), distance perception is often inaccurate but can be improved through active engagement, such as walking. While prior research suggests that action planning and execution can enhance the perception of action-related features, the effects of specific actions on perception in VEs remain unclear. This study investigates how different interactions (viewing-only, reaching, and grasping) affect size perception in virtual reality (VR), and whether teleportation (Experiment 1) and smooth locomotion (Experiment 2) influence these effects. Participants approached a virtual object using either teleportation or smooth locomotion and interacted with the target using a virtual hand. They estimated the target's size before and after the approach by adjusting the size of a comparison object. Results revealed that size perception improved after interaction across all conditions in both experiments, with viewing-only leading to the most accurate estimates. This suggests that, unlike in real environments, additional manual interaction does not significantly enhance size perception in VR when only visual input is available. Additionally, teleportation was more effective than smooth locomotion in improving size estimates. These findings extend action-based perceptual theories to VR, showing that interaction type and approach method can influence the accuracy of size perception in the absence of tactile feedback. Further, by analysing the spatial distribution of gaze during the different interaction conditions, this study suggests that specific motor responses combined with movement approaches affect gaze behaviour, offering insights for applied VR settings that prioritize perceptual accuracy.

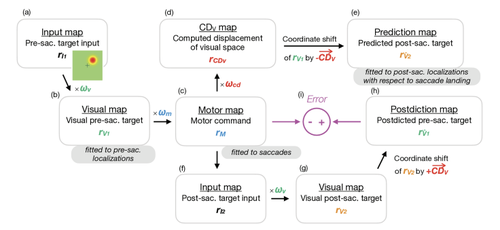

In current computational models of oculomotor learning, 'the' movement vector is adapted in response to targeting errors. However, for saccadic eye movements, learning is spatially distributed, i.e., it transfers to surrounding positions. This adaptation field resembles the topographic maps of visual and motor activity in the brain and suggests that learning acts not on the population vector but already at the level of the 2D population response. Here we present a population-based gain field model for saccade adaptation in which sensorimotor transformations are implemented as error-sensitive gain field maps that modulate the population response of visual and motor signals and of the internal saccade estimate based on corollary discharge (CD). We fit the model to saccades and visual target localizations across adaptation, showing that adaptation and its spatial transfer can be explained by locally distributive learning that operates on visual, motor and CD gain field maps. We show that 1) the scaled locality of the adaptation field is explained by population coding, 2) its radial shape is explained by error encoding in polar-angle coordinates, and 3) its asymmetry is explained by an asymmetric shape of learning rates along the amplitude dimension. Learning exhibits the highest peak rate, the widest spatial extension and a pronounced asymmetry in the motor domain, whereas in the visual and internal saccade domains learning appears more localized. Moreover, our results suggest that the CD-based internal saccade representation has a response field that monitors only part of the ongoing saccade changes during learning. Our framework opens the door to studying spatial generalization and interference of learning in multiple contexts.
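To make the idea of learning on the population response concrete, the Python sketch below uses Gaussian tuning over a single amplitude axis, a gain map read out as a normalized weighted sum, and a delta rule. These are simplifying assumptions for illustration (one dimension instead of a 2D map, a single motor gain map, no visual or CD maps), not the fitted model. Because each unit's gain changes in proportion to its activity at the trained amplitude, adaptation transfers to nearby amplitudes and fades with distance.

```python
import math
from typing import List, Sequence


def population_response(x: float, centers: Sequence[float], sigma: float) -> List[float]:
    """Gaussian tuning: activity of each unit for a saccade of amplitude x (deg)."""
    return [math.exp(-((x - c) ** 2) / (2 * sigma ** 2)) for c in centers]


def readout(x: float, gains: Sequence[float], centers: Sequence[float], sigma: float) -> float:
    """Gain-modulated readout: normalized, gain-weighted sum of preferred amplitudes."""
    r = population_response(x, centers, sigma)
    return sum(g * ri * c for g, ri, c in zip(gains, r, centers)) / sum(r)


def adapt(gains: Sequence[float], x_train: float, target_gain: float,
          centers: Sequence[float], sigma: float, lr: float = 0.01,
          trials: int = 300) -> List[float]:
    """Delta-rule learning on the gain map at a single trained amplitude.

    Each unit's gain changes in proportion to its activity at x_train, so learning
    is local in the population and transfers only to overlapping amplitudes.
    """
    gains = list(gains)
    r = population_response(x_train, centers, sigma)
    total = sum(r)
    for _ in range(trials):
        error = target_gain * x_train - readout(x_train, gains, centers, sigma)
        for i, (ri, c) in enumerate(zip(r, centers)):
            # gradient-descent step on 0.5 * error**2 with respect to gains[i]
            gains[i] += lr * error * ri * c / total
    return gains


if __name__ == "__main__":
    centers = [float(c) for c in range(41)]   # preferred amplitudes 0..40 deg
    sigma = 2.0
    baseline = [1.0] * len(centers)
    adapted = adapt(baseline, x_train=10.0, target_gain=0.8, centers=centers, sigma=sigma)
    for x in (10.0, 14.0, 25.0):
        ratio = readout(x, adapted, centers, sigma) / readout(x, baseline, centers, sigma)
        print(f"{x:4.0f} deg: amplitude relative to baseline {ratio:.3f}")
```

Training a 20% gain reduction at 10 deg brings that amplitude to about 0.8 of baseline, partially adapts a neighbouring amplitude, and leaves distant amplitudes essentially unchanged.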

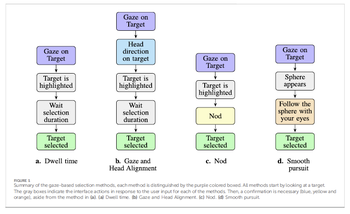

Introduction: Extended reality (XR) technologies, particularly gaze-based interaction methods, have evolved significantly in recent years to improve accessibility and reach broader user communities. While previous research has improved the simplicity and inclusivity of gaze-based selection, the adaptability of such systems, particularly in terms of user comfort and fault tolerance, has not yet been fully explored.

Methods: In this study, four gaze-based interaction techniques were examined in a visual search game in virtual reality (VR) with 52 participants. The techniques tested were selection by dwell time, confirmation by head orientation, nodding, and smooth pursuit eye movements. Both subjective and objective measures were assessed: the NASA-TLX for perceived task load, and task completion time and score for objective performance.

Results: Significant differences were found between the interaction techniques in NASA-TLX dimensions, target search time and overall performance, indicating different levels of efficiency and intuitiveness across methods. Gender differences in interaction preferences and cognitive load were also found.

Discussion: These findings highlight the importance of personalizing gaze-based VR interfaces to the individual user to improve accessibility, reduce cognitive load and enhance the user experience. Personalizing gaze interaction strategies can support more inclusive and effective VR systems that benefit both general and accessibility-focused populations.
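Dwell-time selection, the first technique listed in the Methods above, can be sketched as a timer that fires once gaze rests on the same target long enough. The Python below is a minimal illustration; the (timestamp, target) sample format and the 0.8 s default threshold are assumptions, not the parameters used in the study.

```python
from typing import Iterable, List, Optional, Tuple


def dwell_select(samples: Iterable[Tuple[float, Optional[str]]],
                 dwell_time: float = 0.8) -> List[Tuple[float, str]]:
    """Return (time, target) selection events from (timestamp_s, gazed_target) samples.

    gazed_target is None when gaze is not on any selectable object. A target is
    selected once gaze has stayed on it continuously for dwell_time seconds; the
    timer then restarts, so a further selection needs another full dwell.
    """
    selections: List[Tuple[float, str]] = []
    current: Optional[str] = None   # target currently under gaze
    start = 0.0                     # time at which the current dwell began
    for t, target in samples:
        if target != current:
            current, start = target, t   # gaze moved to a new target: restart the timer
            continue
        if current is not None and t - start >= dwell_time:
            selections.append((t, current))
            start = t
    return selections


if __name__ == "__main__":
    # 100 Hz toy stream: a brief glance at "A", then a steady fixation on "B"
    stream = [(i / 100, "A") for i in range(50)] + [(i / 100, "B") for i in range(50, 150)]
    print(dwell_select(stream))
```

Restarting the timer after each selection is one of several possible designs; another is to require gaze to leave the target before it can be selected again.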

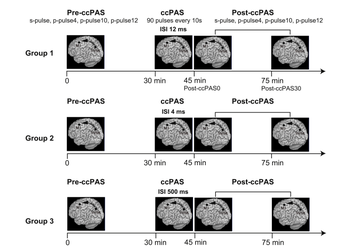

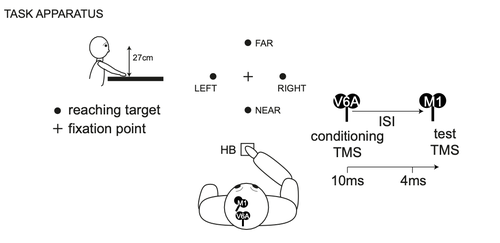

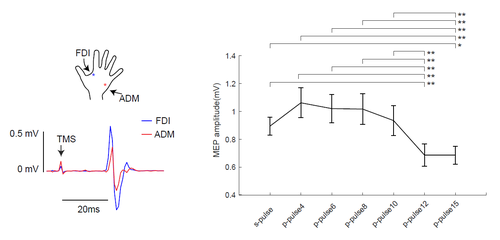

Cortico-cortical paired associative stimulation (ccPAS) is a powerful transcranial magnetic stimulation (TMS) protocol thought to rely on Hebbian plasticity and known to strengthen effective connectivity, mainly within frontal lobe networks. Here, we extend previous work by exploring the effects of ccPAS on the pathway linking the medial posterior parietal area hV6A with the primary motor cortex (M1), whose plasticity mechanisms remain largely unexplored. To assess the effective connectivity of the hV6A-M1 network, we measured motor-evoked potentials (MEPs) in 30 right-handed volunteers at rest during dual-site, paired-pulse TMS. Consistent with previous findings, MEPs were inhibited when the conditioning stimulus over hV6A preceded the test stimulus over M1 by 12 ms, highlighting inhibitory hV6A-M1 causal interactions. We then manipulated the hV6A-M1 circuit via ccPAS using inter-stimulus intervals (ISIs) not tested before. Our results revealed a time-dependent modulation: only when the conditioning stimulus preceded the test stimulus by 12 ms did we find a gradual increase in MEP amplitude during ccPAS, together with excitatory aftereffects. In contrast, when ccPAS was applied with an ISI of 4 ms or 500 ms, no changes in corticospinal excitability were observed, suggesting that temporal specificity is a critical factor in modulating the hV6A-M1 network. These results suggest that ccPAS can induce time-dependent Hebbian plasticity in the dorsomedial parieto-frontal network at rest, offering novel insights into the network's plasticity and temporal dynamics.

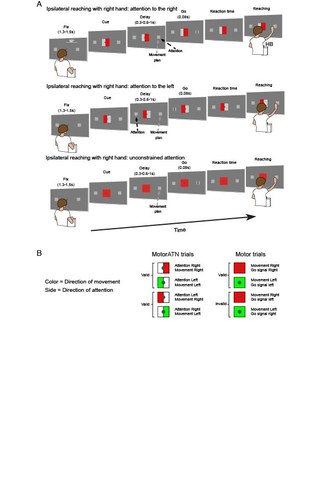

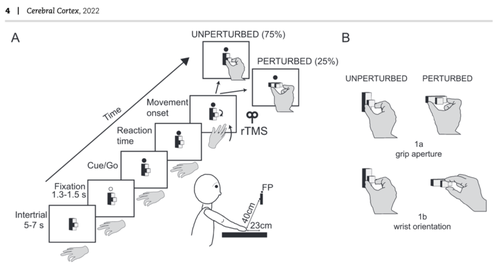

The interplay between attention, alertness, and motor planning is crucial for our manual interactions. To investigate the neural bases of this interaction and challenge the view that attention cannot be disentangled from motor planning, we instructed human volunteers of both sexes to plan and execute reaching movements while attending to the target, while attending elsewhere, or without constraints on attention. We recorded reach reaction times and pupil diameter, and interfered with the function of the medial posterior parietal cortex (mPPC) using online repetitive transcranial magnetic stimulation to test the causal role of this region in the interplay between spatial attention and reaching. We found that mPPC plays a key role in the spatial association of reach planning and covert attention. Moreover, alertness, measured by pupil size, is a good predictor of the promptness of reach initiation only when the reach is planned toward attended targets, and mPPC is causally involved in this coupling. Contrary to previous accounts, we suggest that mPPC is involved neither in reach planning per se nor in sustained covert attention in the absence of a reach plan, but specifically in attention functional to reaching.

By dynamic planning, we refer to the ability of the human brain to infer and impose motor trajectories related to cognitive decisions. A recent paradigm, active inference, brings fundamental insights into the adaptation of biological organisms, which constantly strive to minimize prediction errors in order to restrict themselves to life-compatible states. Over the past years, many studies have shown how human and animal behaviors can be explained in terms of active inference, either as discrete decision-making or as continuous motor control, inspiring innovative solutions in robotics and artificial intelligence. Still, the literature lacks a comprehensive account of how to plan realistic actions effectively in changing environments. With the goal of modeling complex tasks such as tool use, we delve into the topic of dynamic planning in active inference, keeping in mind two crucial aspects of biological behavior: the capacity to understand and exploit affordances for object manipulation, and the capacity to learn the hierarchical interactions between the self and the environment, including other agents. We start from a simple unit and gradually describe more advanced structures, comparing recently proposed design choices and providing basic examples. This study distances itself from traditional views centered on neural networks and reinforcement learning, and points toward a yet unexplored direction in active inference: hybrid representations in hierarchical models.

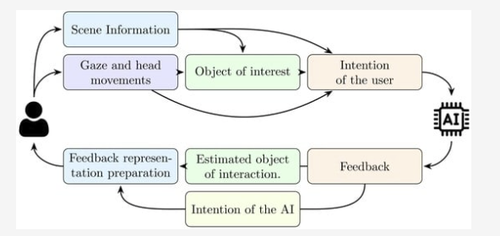

Bi-directional gaze-based communication offers an intuitive and natural way for users to interact with systems. This approach uses the user’s gaze not only to communicate intent but also to obtain feedback, which promotes mutual understanding and trust between the user and the system. In this review, we explore the state of the art in gaze-based communication in both directions: from user to system and from system to user. First, we examine how eye-tracking data are processed and used for communication from the user to the system. This includes a range of gaze-based interaction techniques and the critical role of intent prediction, which enhances the system’s ability to anticipate the user’s needs. Next, we analyze the reverse pathway, i.e., how systems provide feedback to users via various channels, highlighting their advantages and limitations. Finally, we discuss the potential integration of these two communication streams, paving the way for more intuitive and efficient gaze-based interaction models, especially in the context of artificial intelligence. Our overview emphasizes the future prospects of combining these approaches to create seamless, trust-building communication between users and systems. Designing these systems with a focus on usability and accessibility will be critical to making them effective communication tools for a wide range of users.

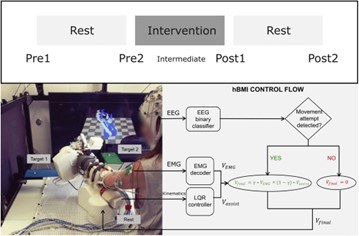

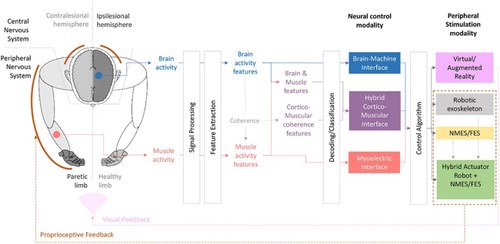

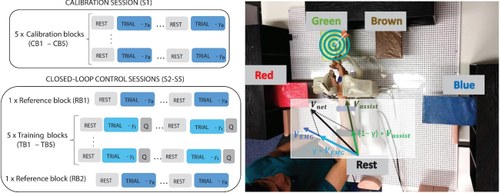

The primary constraint of non-invasive brain-machine interfaces (BMIs) in stroke rehabilitation is the poor spatial resolution with which they capture neural activity related to motor intention. To address this limitation, hybrid brain-muscle-machine interfaces (hBMIs) have been proposed as superior alternatives. These hybrid interfaces incorporate supplementary input from muscle signals to enhance the accuracy, smoothness and dexterity of rehabilitation device control. Nevertheless, determining how control should be distributed between brain and muscles is complex, particularly for exoskeletons with multiple degrees of freedom (DoFs). Here we present a feasibility, usability and functionality study of a bio-inspired hybrid brain-muscle-machine interface for continuous control of an upper-limb exoskeleton with 7 DoFs. The system implements a hierarchical control strategy that follows the natural motor command pathway from the brain to the muscles. Additionally, it employs an innovative mirror myoelectric decoder, offering patients a reference model that helps them relearn healthy muscle activation patterns during training. Furthermore, the multi-DoF exoskeleton enables the practice of coordinated arm and hand movements, which may facilitate early use of the affected arm in daily life activities. In this pilot trial, six chronic, severely paralyzed patients controlled the multi-DoF exoskeleton using their brain and muscle activity. The intervention consisted of 2 weeks of hBMI training of functional tasks with the system, followed by physiotherapy. Patients’ feedback was collected during and after the trial through several questionnaires. Assessment sessions, comprising clinical scales and neurophysiological measurements, were conducted before and immediately after the intervention and at a 2-week follow-up. Patients’ feedback indicates high acceptance of the technology and confidence in its rehabilitation potential.
Half of the patients showed improvements in arm function and 83% (five of six) improved their hand function. Furthermore, we found improved muscle activation patterns and increased motor evoked potentials after the intervention. These results underscore the potential of bio-inspired interfaces that engage the entire nervous system, from the brain to the muscles, for the rehabilitation of stroke patients, even those who are severely paralyzed and in the chronic phase.

Biologically plausible spiking neural network models of sensory cortices can be instrumental in understanding and validating their principles of computation. Models based on Cortical Computational Primitives (CCPs), such as Hebbian plasticity and Winner-Take-All (WTA) networks, have already proven successful in this respect. However, the specific nature and roles of CCPs in sensorimotor cortices during cognitive tasks are yet to be fully deciphered. The evolution of motor intention in the Posterior Parietal Cortex (PPC) before arm-reaching movements is a cognitive process well suited to assessing the effectiveness of different CCPs. To this end, we propose a biologically plausible model composed of heterogeneous spiking neurons that implements and combines multiple CCPs, such as multi-timescale learning and soft WTA modules. By training the model to replicate the dynamics of in vivo recordings from non-human primates, we show that it generates meaningful representations from unbalanced input data and faithfully reproduces the transition from motor planning to action selection. Our findings elucidate the importance of distributing spike-based plasticity across multiple timescales, and explain the role of different CCPs in models of frontoparietal cortical networks that perform multisensory integration to efficiently inform action execution.
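At the level of firing rates, a soft winner-take-all module is often summarized as divisive normalization of exponentiated inputs, i.e., a softmax. The Python below is that rate-based abstraction, an assumption made for brevity; the model described above implements WTA with heterogeneous spiking neurons, not with this function.

```python
import math
from typing import List, Sequence


def soft_wta(drive: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Rate-based soft winner-take-all (softmax).

    Shared, divisive inhibition normalizes the exponentiated drives, so the most
    strongly driven unit dominates without completely silencing the others.
    Lower temperature -> closer to hard WTA; higher temperature -> more uniform.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    peak = max(drive)  # subtracting the max avoids overflow in exp()
    exps = [math.exp((d - peak) / temperature) for d in drive]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    drive = [1.0, 1.2, 0.5]   # toy input drives, e.g. for three candidate reach targets
    print(soft_wta(drive, temperature=1.0))    # graded competition
    print(soft_wta(drive, temperature=0.05))   # nearly hard selection of unit 1
```

The temperature parameter is an illustrative knob for how "soft" the competition is; it has no direct counterpart named in the abstract.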

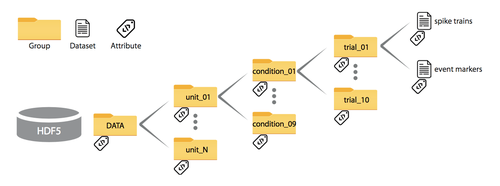

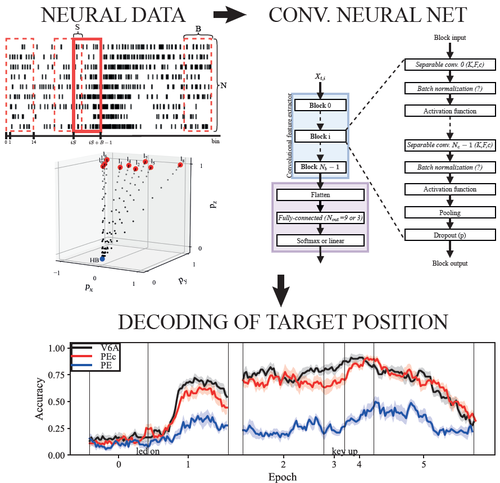

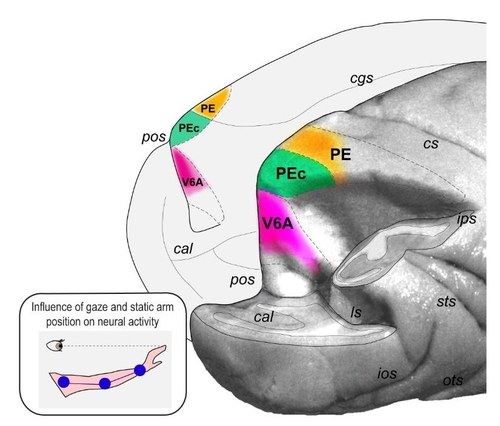

Facilitating data sharing in scientific research, especially in animal studies, holds immense value, particularly for reducing animal distress and increasing the efficiency of data collection. This study presents a curated collection of neural activity data from six electrophysiological datasets recorded from three parietal areas (V6A, PEc, PE) of two Macaca fascicularis during an instructed-delay foveated reaching task. The resource is publicly available and includes spike timestamps, behavioural event timings and supplementary metadata, together with a comprehensive description of the data structure. To enhance accessibility, the data are stored as HDF5 files, a convenient format thanks to its flexible structure and the capability to attach diverse information to each hierarchical sub-level. To make the datasets ready to use, we also provide MATLAB and Python code examples that let users quickly familiarize themselves with the data structure.

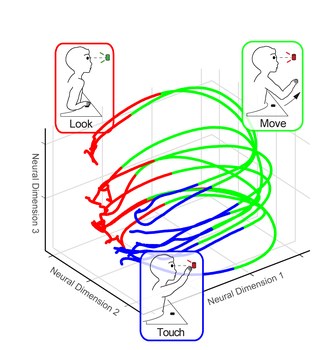

Attention is needed to perform goal-directed, vision-guided movements. We investigated whether the direction of covert attention modulates movement outcomes and dynamics. Right-handed and left-handed volunteers attended to a spatial location while planning a reach toward the same or the opposite hemifield, or planned a reach without constraints on attention. As outcome measures, we recorded behavioral variables of ipsilateral and contralateral reaching; as a measure of the dynamics of motor control, we computed the tangling of behavioral trajectories obtained through principal component analysis. We found that the direction of covert attention had significant effects on the dynamics of motor control, specifically during contralateral reaching. The data suggest that motor control was more feedback-driven when attention was directed leftward than when it was directed rightward or not constrained, irrespective of handedness. These results may help to better understand the neural bases of asymmetrical neurological disorders such as hemispatial neglect.
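Trajectory tangling is commonly quantified as $Q(t) = \max_{t'} \frac{\lVert \dot{x}_t - \dot{x}_{t'} \rVert^2}{\lVert x_t - x_{t'} \rVert^2 + \varepsilon}$. It is high when similar states are followed by very different derivatives. The Python below is a minimal sketch. It assumes the trajectory has already been projected onto its leading principal components and uses a forward-difference derivative. The value of $\varepsilon$ is an illustrative assumption.

```python
import math
from typing import List, Sequence


def tangling(traj: Sequence[Sequence[float]], dt: float, eps: float = 1e-3) -> List[float]:
    """Q(t) = max over t' of |dx_t - dx_t'|^2 / (|x_t - x_t'|^2 + eps).

    traj: state vectors sampled every dt seconds (e.g. scores on the first principal
    components). Derivatives use forward differences, so the last sample is dropped.
    """
    x = [list(p) for p in traj[:-1]]
    dx = [[(b - a) / dt for a, b in zip(p, q)] for p, q in zip(traj[:-1], traj[1:])]

    def sqdist(u: Sequence[float], v: Sequence[float]) -> float:
        return sum((a - b) ** 2 for a, b in zip(u, v))

    return [max(sqdist(dx[t], dx[s]) / (sqdist(x[t], x[s]) + eps) for s in range(len(x)))
            for t in range(len(x))]


if __name__ == "__main__":
    n = 200
    dt = 2 * math.pi / n
    circle = [(math.cos(k * dt), math.sin(k * dt)) for k in range(n + 1)]
    # figure eight: passes through the origin twice with different velocities
    eight = [(math.sin(k * dt), math.sin(k * dt) * math.cos(k * dt)) for k in range(n + 1)]
    print(max(tangling(circle, dt)), max(tangling(eight, dt)))
```

A circle stays untangled (Q below 1), whereas the self-crossing figure eight produces very large Q at the crossing point, the signature of a flow that cannot be generated by smooth autonomous dynamics.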

We recently found that discrete neural states are associated with reaching movements in two contiguous areas of the posterior parietal cortex (namely, areas V6A and PEc). In other words, neuronal networks in these regions are active in distinct ways during different phases of arm movements.

In our latest study, expanding on this work, the Hidden Markov Model (HMM, an algorithm capable of identifying neural states) revealed that another parietal area, PE, also follows a similar sequence of states. We then went a step further and, using a coupled clustering and decoding approach, demonstrated that these neural states carried behaviour-related information in all three parietal areas. However, when comparing decoding accuracy, PE was less informative than V6A and PEc. Furthermore, V6A outperformed PEc in target inference, indicating functional differences between the parietal areas. To test the consistency of these differences, we used supervised and unsupervised variants of the HMM and compared their performance with two more common classifiers, Support Vector Machines and Long Short-Term Memory networks. The decoding differences between the areas were invariant to the algorithm used and reproduced the dissimilarities found with the HMM, indicating that these dissimilarities are intrinsic to the information encoded by parietal neurons.

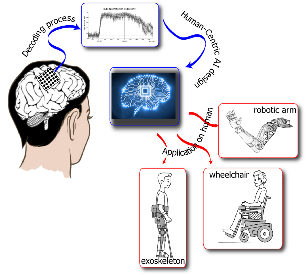

These results show that, when decoding from the parietal cortex, e.g. in implementations of brain-machine interfaces, attention must be paid to the selection of the most suitable neural signal source, given the great heterogeneity of this cortical sector.
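As an illustration of how an HMM assigns time bins to discrete neural states, the Python sketch below runs Viterbi decoding on binned spike counts. It assumes independent Poisson neurons with known state-specific rates and known transition probabilities (in practice these are fitted, e.g. with expectation-maximization). It is not the pipeline used in the study, and the toy rates and counts are invented for illustration.

```python
import math
from typing import List, Sequence


def poisson_logpmf(k: int, lam: float) -> float:
    """log P(k | lam) for a Poisson-distributed spike count (lam > 0)."""
    return k * math.log(lam) - lam - math.lgamma(k + 1)


def viterbi_poisson(counts: Sequence[Sequence[int]], log_init: Sequence[float],
                    log_trans: Sequence[Sequence[float]],
                    rates: Sequence[Sequence[float]]) -> List[int]:
    """Most likely hidden-state sequence of a Poisson HMM.

    counts[t][n]    spike count of neuron n in time bin t
    rates[s][n]     expected count of neuron n in state s (assumed already fitted)
    log_init[s]     log initial-state probability
    log_trans[a][b] log probability of moving from state a to state b
    """
    n_states = len(log_init)

    def emission(s: int, obs: Sequence[int]) -> float:
        # neurons are treated as conditionally independent given the state
        return sum(poisson_logpmf(k, lam) for k, lam in zip(obs, rates[s]))

    delta = [log_init[s] + emission(s, counts[0]) for s in range(n_states)]
    backpointers: List[List[int]] = []
    for obs in counts[1:]:
        prev = delta
        pointers, delta = [], []
        for s in range(n_states):
            best = max(range(n_states), key=lambda p: prev[p] + log_trans[p][s])
            pointers.append(best)
            delta.append(prev[best] + log_trans[best][s] + emission(s, obs))
        backpointers.append(pointers)

    # trace back from the most likely final state
    state = max(range(n_states), key=lambda s: delta[s])
    path = [state]
    for pointers in reversed(backpointers):
        state = pointers[state]
        path.append(state)
    return path[::-1]


if __name__ == "__main__":
    rates = [[1.0, 8.0], [8.0, 1.0]]            # two states, two neurons (toy values)
    log_init = [math.log(0.5), math.log(0.5)]
    log_trans = [[math.log(0.9), math.log(0.1)], [math.log(0.1), math.log(0.9)]]
    counts = [[1, 9], [0, 7], [2, 8], [9, 1], [7, 0], [8, 2]]
    print(viterbi_poisson(counts, log_init, log_trans, rates))
```

The "sticky" transition matrix (high self-transition probability) favours contiguous segments, which is what makes HMM states map onto task phases rather than flickering bin by bin.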

More than 85% of stroke survivors suffer from varying degrees of disability for the rest of their lives. They will require support ranging from occasional to full-time assistance. These conditions also have an enormous economic impact on their families and on health care systems. Current rehabilitation treatments have limited efficacy, and their long-term effect is controversial. Here we review different challenges related to the design and development of neural interfaces for rehabilitative purposes. We analyze the current literature on the effect of neurofeedback on functional motor rehabilitation of stroke patients. We highlight the potential of these systems to reconnect brain and muscles. We also describe the aspects that should be taken into account to restore motor control. Our aim is to help researchers design interfaces that demonstrate and validate neuromodulation strategies to enforce a contingent and functional neural linkage between the central and peripheral nervous systems. We thus offer guidance for designing systems that can improve and/or reactivate neuroplastic mechanisms and open a new recovery window for stroke patients.

How can we realize sophisticated purposeful movements? While agile motor control is now standard in robotics, the computational machinery supporting this capacity in biological organisms remains controversial. A theory called "active inference" assumes that actions arise by inferring limb trajectories from the desired effects in an exteroceptive (e.g., visual) space. Yet, how this theory affords efficient motor control is unclear. Our innovative solution rests on designing a hierarchical kinematic model that mimics the body structure and predicts the exteroceptive effects of movements. In particular, a simple but effective mapping from extrinsic to intrinsic coordinates is realized via inference, and it is then scaled up to drive complex kinematic chains. Rich goals can be specified in both domains using attractive or repulsive forces, allowing an agent to perform tasks like drawing a circle, reaching and avoiding objects. The proposed model reproduces sophisticated bodily movements and paves the way for computationally efficient and biologically plausible control of actuated systems.
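A minimal Python sketch of an extrinsic-to-intrinsic mapping, assuming a planar serial chain and plain gradient descent on the squared hand-position error: the Cartesian error is projected back onto the joints through the transpose of the kinematic Jacobian, and the goal acts as an attractor. This is a simplified stand-in for the hierarchical inference described above, not the authors' model; the function names and step sizes are illustrative.

```python
import math
from typing import List, Sequence, Tuple


def forward_kinematics(q: Sequence[float], lengths: Sequence[float]) -> Tuple[float, float]:
    """Hand position of a planar serial chain; q are relative joint angles (rad)."""
    x = y = angle = 0.0
    for qi, li in zip(q, lengths):
        angle += qi
        x += li * math.cos(angle)
        y += li * math.sin(angle)
    return x, y


def infer_joints(goal: Tuple[float, float], q0: Sequence[float], lengths: Sequence[float],
                 lr: float = 0.1, steps: int = 2000) -> List[float]:
    """Infer joint angles that bring the hand to `goal` by descending the squared
    extrinsic prediction error 0.5 * |goal - fk(q)|^2 (the goal acts as an attractor)."""
    q = list(q0)
    for _ in range(steps):
        x, y = forward_kinematics(q, lengths)
        ex, ey = goal[0] - x, goal[1] - y          # error in extrinsic (Cartesian) space
        # joint positions: joint 0 at the origin, joint i at the end of link i-1
        joints = [(0.0, 0.0)]
        angle = 0.0
        for qi, li in zip(q[:-1], lengths[:-1]):
            angle += qi
            px, py = joints[-1]
            joints.append((px + li * math.cos(angle), py + li * math.sin(angle)))
        # map the error to intrinsic (joint) space with the Jacobian transpose:
        # rotating joint i moves the hand by (-(y - py), x - px) per radian
        grads = [ex * (py - y) + ey * (x - px) for px, py in joints]
        q = [qi + lr * g for qi, g in zip(q, grads)]
    return q


if __name__ == "__main__":
    lengths = (1.0, 1.0, 1.0)                       # a three-link chain
    q = infer_joints((1.0, 1.5), (0.2, 0.2, 0.2), lengths)
    print([round(a, 3) for a in q], forward_kinematics(q, lengths))
```

The same update works for chains of any length because each joint's contribution depends only on its position relative to the hand, which is one way a simple mapping can be scaled up to longer kinematic chains.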

Successful behaviour relies on the appropriate interplay between action and perception. The well-established dorsal and ventral stream theories describe two distinct functional pathways for action and perception, respectively. In physiological conditions, the two pathways closely cooperate to produce successful adaptive behaviour. Because perception and action are coupled, an interface is required that provides a common reading of the two functions. Several studies have proposed different types of perception and action interfaces, suggesting their role in creating a shared interaction channel. In the present review, we describe three possible perception and action interfaces: i) the motor code, including common coding approaches, ii) attention, and iii) object affordance, and we highlight their potential neural correlates. From this overview, a recurrent neural substrate underlying all these interface functions emerges as crucial: the parieto-frontal circuit. Within this framework, the present review proposes a novel model that includes the superior parietal regions and their respective contributions to the different action and perception interfaces.

The motor disability caused by stroke compromises the autonomy of patients and caregivers. To support autonomy and other personal and social needs, trustworthy, multifunctional, adaptive, and interactive assistive devices represent optimal solutions. To this end, an artificial intelligence system named MAIA is intended to interpret users' intentions and translate them into actions performed by assistive devices. Analysing the perspectives of patients and caregivers is essential to develop a MAIA system that operates in harmony with their needs as far as possible.

Method: Post-stroke patients and caregivers were interviewed to explore the impact of motor disability on their lives, their previous experiences with assistive technologies, their opinions and attitudes about MAIA, and their needs. Interview transcripts were analysed using inductive thematic analysis.

Results: Sixteen interviews were conducted with twelve post-stroke patients and four caregivers. Three themes emerged: 1) Needs to be satisfied, 2) MAIA technology acceptance, and 3) Perceived trustfulness. Overall, patients were seeking rehabilitative technology, whereas caregivers needed assistive technology to help them daily. An easy-to-use and ergonomic technology is preferable. However, only a few participants trust a system based on artificial intelligence.

Conclusions: An interactive artificial intelligence technology could help post-stroke patients and their caregivers to restore motor autonomy. Participants' insights for developing the system depend on their motor ability and on their role as patient or caregiver. Although technology is growing exponentially, more efforts are needed to strengthen people's trust in advanced technology.

All our dexterous movements depend on the correct functioning of networks of brain areas. Knowing the functional timing of these networks helps us gain a deeper understanding of how the brain enables accurate arm movements. In this article, we probed the parieto-frontal network and demonstrated that it takes 4 ms for the medial posterior parietal cortex to send inhibitory signals to the frontal cortex during reach planning. This fast flow of information does not seem to depend on the availability of visual information about the reaching target. This study paves the way for future studies testing how this timing could be impaired in different neurological disorders.

We advance a novel active inference model of the cognitive processing that underlies the acquisition of a hierarchical action repertoire and its use for observation, understanding and imitation. We illustrate the model in four simulations of a tennis learner who observes a teacher performing tennis shots, forms hierarchical representations of the observed actions, and imitates them. Our simulations show that the agent's oculomotor activity implements an active information sampling strategy that permits inferring the kinematic aspects of the observed movement, which lie at the lowest level of the action hierarchy. In turn, this low-level kinematic inference supports higher-level inferences about deeper aspects of the observed actions: proximal goals and intentions. Finally, the inferred action representations can steer imitative responses, but interfere with the execution of different actions. Our simulations show that hierarchical active inference provides a unified account of action observation, understanding, learning and imitation and helps explain the neurobiological underpinnings of visuomotor cognition, including the multiple routes for action understanding in the dorsal and ventral streams and mirror mechanisms.

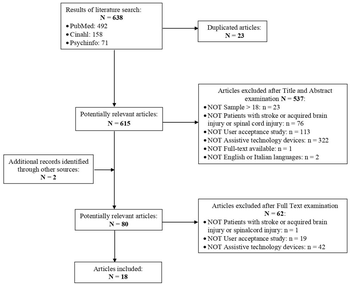

Acquired motor limits can be caused by neurological lesions. Regardless of aetiology, such lesions require patients to develop new coping strategies and adapt to changed motor functionality. In all these cases, assistive technology (AT) may represent a promising solution. The present work is a systematic review of the AT-related scientific literature published in the PubMed, CINAHL, and PsycINFO databases up to September 2022. This review was undertaken to summarise how the acceptance of AT is assessed in people with motor deficits due to neurological lesions. We reviewed papers that (1) dealt with adults (≥18 years old) with motor deficits due to spinal cord or acquired brain injuries and (2) concerned user acceptance of hard AT. A total of 615 studies were identified, of which 18 met the criteria and were reviewed. The constructs used to assess users’ acceptance mainly entail satisfaction, ease of use, safety and comfort. Moreover, the acceptance constructs varied as a function of participants’ injury severity. Despite this heterogeneity, acceptability was mainly ascertained through pilot and usability studies in laboratory settings. Furthermore, ad-hoc questionnaires and qualitative methods were preferred to standardized measurement protocols. This review highlights how greatly people living with acquired motor limits appreciate ATs. On the other hand, the methodological heterogeneity indicates that evaluation protocols should be systematized and finely tuned.

Motor learning through motor training has previously been explored for rehabilitation. Myoelectric interfaces combined with exoskeletons allow patients to receive real-time feedback about their muscle activity. However, the number of degrees of freedom that can be controlled simultaneously is limited, which hinders the training of functional tasks and the effectiveness of rehabilitation therapy. The objective of this study was to develop a myoelectric interface that allows multi-degree-of-freedom control of an exoskeleton involving arm, wrist and hand joints, with an eye toward rehabilitation. In 10 healthy participants, we tested the effectiveness of a myoelectric decoder trained with data from one upper limb and mirrored to control a multi-degree-of-freedom exoskeleton with the opposite upper limb (i.e., a mirror myoelectric interface). All participants successfully controlled multiple upper-limb joints simultaneously. We found evidence that subjects learned the mirror myoelectric model within the span of a five-session experiment, as reflected by a significant decrease in trial execution time and in the number of failed trials. These results are a necessary precursor to evaluating whether a decoder trained with EMG from the healthy limb could foster learning of natural EMG patterns and lead to motor rehabilitation in stroke patients.

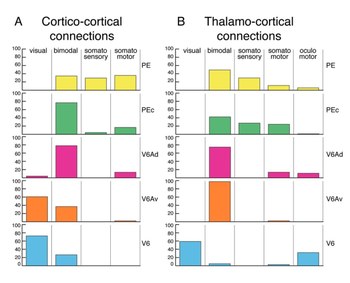

A major issue in modern neuroscience is to understand how cell populations represent multiple spatial and motor features during goal-directed movements. The direction and distance (depth) of arm movements often appear to be controlled independently during behavior, but it is unknown whether they share neural resources. Using information theory, singular value decomposition, and dimensionality reduction methods, we compare direction and depth effects and their convergence across three parietal areas during an arm movement task. All methods show a stronger direction effect during early movement preparation, whereas depth signals prevail during movement execution. Going from anterior to posterior sectors, we report an increasing number of cells processing both signals and stronger depth effects. These findings suggest serial processing of direction and depth, consistent with behavioral evidence, and reveal a gradient of joint versus independent control of these features in parietal cortex that supports its role in sensorimotor transformations.
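The information-theoretic comparison mentioned above can be illustrated with a minimal sketch: a plug-in estimate of the mutual information (in bits) between a discrete task variable, such as reach direction or depth, and a neuron's binned spike count. This is an illustration under stated assumptions, not the analysis pipeline of the study; the function name `mutual_information` and the toy labels are hypothetical, and plug-in estimates are biased upward for small trial counts, so real analyses usually add a bias correction or a shuffle control.

```python
import math
from collections import Counter
from typing import Hashable, Sequence


def mutual_information(labels: Sequence[Hashable], counts: Sequence[int]) -> float:
    """Plug-in estimate of I(label; count) in bits from paired trials.

    labels: task condition per trial (e.g. "left"/"center"/"right", or a depth level)
    counts: binned spike count of one neuron on the same trials
    """
    n = len(labels)
    if n == 0 or n != len(counts):
        raise ValueError("need two non-empty sequences of equal length")
    n_label = Counter(labels)            # trials per condition
    n_count = Counter(counts)            # trials per spike-count value
    n_joint = Counter(zip(labels, counts))
    info = 0.0
    for (lab, cnt), n_lc in n_joint.items():
        # p(l,c) * log2[p(l,c) / (p(l) p(c))], written with raw trial counts
        info += (n_lc / n) * math.log2(n_lc * n / (n_label[lab] * n_count[cnt]))
    return info
```

Computing this quantity separately for direction labels and depth labels, in successive task epochs, is one simple way to compare how strongly each variable modulates a cell over time.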

In the past, neuroscience focused on individual neurons as the functional units of the nervous system, but over time this approach fell short of accounting for new experimental evidence, especially in associative and motor cortices. For this reason, and thanks to great technological advances, part of modern research has shifted its focus from the responses of single neurons to the activity of neural ensembles, now considered the real functional units of the system. However, at the microscale, individual neurons remain the computational components of these networks, so the study of population dynamics cannot disregard the individual neurons that form its natural substrate. In this new framework, ideas such as the capability of single cells to encode a specific stimulus (neural selectivity) may become obsolete and need to be profoundly revised. One step in this direction was the introduction of the concept of “mixed selectivity,” the capacity of single cells to integrate multiple variables flexibly, allowing individual neurons to participate in different networks. In this review, the authors outline the most important features of mixed selectivity and present recent work demonstrating its presence in the associative areas of the posterior parietal cortex. Finally, in discussing these findings, the authors pose some open questions that could be addressed by future studies.

The dexterous control of our grasping actions relies on the cooperative activation of many brain areas. In the parietal lobe, two grasp-related areas collaborate to orchestrate an accurate grasping action: dorsolateral area AIP and dorsomedial area V6A. Single-cell recordings in monkeys and fMRI studies in humans have suggested that both areas specify grip aperture and wrist orientation, but encode these grasping parameters differently depending on the context. To elucidate the causal role of the putative human homologues of these areas (phAIP and hV6A), we applied repetitive transcranial magnetic stimulation (rTMS) over them while participants performed grasping actions (unperturbed grasping). rTMS over phAIP impaired wrist orientation, whereas stimulation over hV6A impaired grip aperture encoding. In a small percentage of trials, an unexpected reprogramming of grip aperture or wrist orientation was required (perturbed grasping). In these cases, rTMS over either hV6A or phAIP impaired the reprogramming of both grip aperture and wrist orientation. These results are the first direct demonstration that two grasp-related parietal areas encode grasping parameters differently.

Despite the well-recognized role of the posterior parietal cortex (PPC) in processing sensory information to guide action, little is known about how different PPC areas differ in their encoding of this dynamic processing. Within the monkey’s PPC, the superior parietal lobule hosts areas V6A, PEc, and PE, which belong to the dorso-medial visual stream specialized in planning and guiding reaching movements. In this study, a convolutional neural network (CNN) approach is used to investigate how information is processed in these areas. We trained two macaque monkeys to perform a delayed reaching task towards 9 positions (3 depth levels × 3 direction levels) in 3D peripersonal space. The activity of single cells was recorded from V6A, PEc, and PE and fed to CNNs designed and trained to exploit the temporal structure of neuronal activation patterns in order to decode the target positions reached by the monkey. Bayesian optimization was used to set the main CNN hyper-parameters. In addition to discrete positions in space, we used the same network architecture to decode plausible reaching trajectories. Data from the more caudal areas, V6A and PEc, outperformed data from area PE in decoding spatial position. The results support a dynamic encoding of the different phases and properties of the reaching movement, differentially distributed over a network of interconnected areas. This study highlights the usefulness of decoding neuronal firing rates with CNNs to improve our understanding of how sensorimotor information is encoded in PPC to perform reaching movements. The results may have implications for novel neuroprosthetic devices that decode these rich signals to faithfully carry out patients’ intentions.

In daily life, we are often confronted with a combination of a visual search task and the subsequent grasping of an object. Visual search research provides strong evidence that scene context affects where and how often we gaze within a given scene. In a grasping task, it is still unknown whether the different phases of object manipulation, such as reaching for the object or transporting it from one location to another, are affected by scene context. The present study investigated the effect of scene context on human object manipulation in an interactive task in a realistic scenario. Specifically, pick-and-place task performance was evaluated while the objects of interest were placed into typical everyday visual scenes. The main result was that scene context had a significant effect on the search phase, but not on the transport and reach phases of the task. This supports the validity of transferring knowledge about eye and hand movements in grasping tasks performed in isolated settings to realistic, context-rich environments. These findings provide insights into the potential development of systems that support intention prediction.

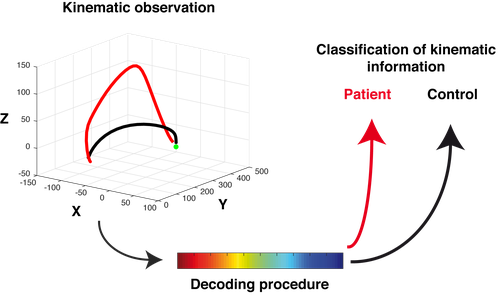

Patients with lesions of the parieto-occipital cortex typically misreach visual targets that they correctly perceive (optic ataxia). Although optic ataxia was described more than 30 years ago, distinguishing this condition from physiological behavior on the basis of kinematic data remains an open challenge. In this study, combining kinematic analysis with machine learning methods, we compared the reaching performance of a patient with bilateral occipitoparietal damage with that of 10 healthy controls. The patient’s deviations could be accurately predicted after only 20% of movement execution in all the spatial positions tested. This classification, based on decoding the initial trajectory, was possible for both the directional and depth components of the movement, suggesting that this method could be applied to characterize pathological motor behavior.

Highlights

Parietal area V6A encodes sensory and motor activity in goal-directed arm movements

V6A acts as a state estimator of the arm position and movement

Gaze-dependent visual neurons and real-position cells encode the action target in V6A

V6A uses the spotlight of attention to guide goal-directed movements of the hand

V6A hosts a priority map for the guidance of reaching arm movements

Acquired brain injury and spinal cord injury are leading causes of severe motor disabilities that impact a person’s autonomy and social life. Enhancing neurological recovery driven by neurogenesis and neuronal plasticity could represent a future solution; at present, however, the recovery of activities through assistive technologies that integrate artificial intelligence is worth examining. MAIA (Multifunctional, adaptive, and interactive AI system for Acting in multiple contexts) is a human-centered AI that aims to allow end-users to control assistive devices naturally and efficiently through continuous bidirectional exchanges of multiple sensorimotor signals.

To explore the acceptability of MAIA, semi-structured interviews (both individual interviews and focus groups) are used to prompt possible end-users (both patients and caregivers) to express their opinions about the expected functionalities, outfits, and services that MAIA should embed, once developed, to fit end-users’ needs.

End-user indications are expected to concern MAIA’s technical, health-related, and setting components. Moreover, psycho-social issues are expected to align with the technology acceptance model. In particular, they are likely to involve intrinsic motivational and extrinsic social aspects, the usefulness of the MAIA system, and its ease of use. Finally, we expect individual factors to impact MAIA: gender, fragility levels, psychological aspects involved in the mental representation of body image, personal endurance, and tolerance toward AT-related burden might be the aspects end-users raise in evaluating the MAIA project.

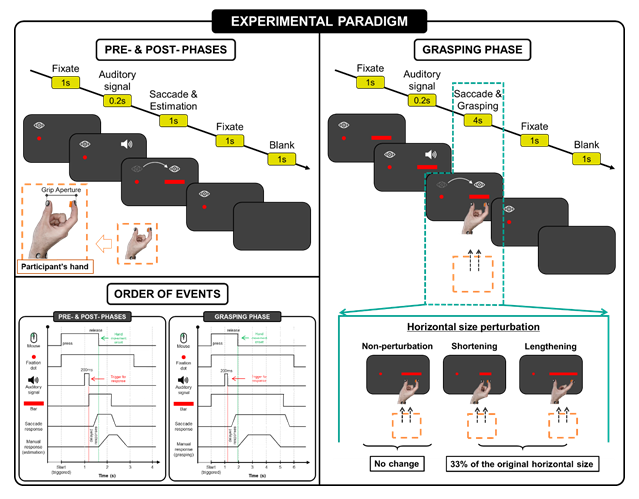

Perception and action are essential aspects of our day-to-day interactions with the environment. When we reach to grasp an object, the hand is pre-shaped according to the perceived intrinsic properties of the object, such as size and shape. In this context, the dual-stream theory proposed years ago suggested independent streams for perception and action. Nowadays, however, the idea of a close action-perception coupling has gained momentum, suggesting that the two processes are not entirely separate but interact with each other, sharing a common representational code. The scientific literature on the effects of action on perception is not very extensive. Some studies have reported that orientation tasks are influenced by the action plan, and that the grasping context can even alter size perception. These findings suggest that perception can be influenced by the action context; nevertheless, it remains unknown how motor actions and unpredictable size perturbations during action execution could influence perception.

In this study, we aimed to determine whether size perception and saccade amplitude before and after grasping movements were modified by horizontal size perturbations during movement execution, with or without tactile feedback.

We made two main findings. First, horizontal size perception is modulated by the execution of grasping movements and, moreover, depends on the presence of a horizontal size perturbation during the grasping action. Second, saccade amplitudes were modified according to the type of target perturbation that occurred during the previous action. These findings suggest that manual perceptual reports and the corresponding saccade amplitudes, taken together, are descriptive parameters of previous motor actions.

The superior parietal lobule (SPL) integrates somatosensory, motor, and visual signals to dynamically control arm movements. During reaching, visual and gaze signals are used to guide the hand to the desired target location, while proprioceptive signals allow the arm trajectory to be corrected and the limb to be kept in the final position at the end of the movement. Three SPL areas are particularly involved in this process: V6A, PEc, and PE. Here, we evaluated the influence of eye and arm position on the single-neuron activity of these areas during the holding period at the end of arm reaching movements, when the arm is motionless and gaze and hand positions are aligned. Two male macaques (Macaca fascicularis) performed a foveal reaching task while single-unit activity was recorded from areas V6A, PEc, and PE. We found that at the end of reaching movements, neurons in all these areas were modulated by both eye position and the static position of the arm. V6A and PEc showed a prevalent combination of gaze and proprioceptive input, while PE seemed to encode these signals more independently. Our results demonstrate that all these SPL areas combine gaze and proprioceptive input to provide accurate monitoring of arm movements.

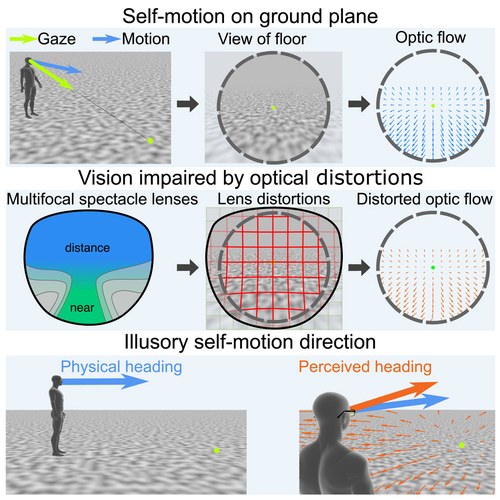

Progressive addition lenses (PALs) are ophthalmic lenses that correct presbyopia by improving near and far vision in different areas of the lens, but they distort the periphery of the wearer's field of view. Distortion-related difficulties reported by PAL wearers include unnatural self-motion perception. Visual self-motion perception is guided by optic flow, the pattern of retinal motion produced by self-motion. We tested the influence of PAL distortions on optic flow-based heading estimation using a model of heading perception and a virtual reality-based psychophysical experiment. The model predicted changes in heading estimation along the vertical axis, depending on visual field size and gaze direction. Consistent with this prediction, participants perceived upward deviations of self-motion when gaze through the periphery of the lens was simulated, but not for gaze through the center. We conclude that PALs may lead to illusions of self-motion, which could be remedied by a careful gaze strategy.
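The link between optic flow and heading can be illustrated with a textbook case: during pure translation (no eye rotation), every flow vector points away from the focus of expansion (FOE), which marks the heading direction in image coordinates. The minimal sketch below estimates the FOE by linear least squares, requiring each flow vector to be parallel to the line from the FOE to its image point. It is a generic illustration under these assumptions, not the heading-perception model used in the study; `estimate_foe` is a hypothetical name.

```python
from typing import Sequence, Tuple

Vec = Tuple[float, float]


def estimate_foe(points: Sequence[Vec], flows: Sequence[Vec]) -> Vec:
    """Least-squares focus of expansion for a purely translational flow field.

    For image point (x, y) with flow (u, w), radial flow implies
    (x - fx) * w - (y - fy) * u = 0, i.e. w*fx - u*fy = x*w - y*u.
    """
    s_ww = s_uu = s_wu = r_x = r_y = 0.0
    for (x, y), (u, w) in zip(points, flows):
        b = x * w - y * u  # right-hand side of one linear equation
        s_ww += w * w
        s_uu += u * u
        s_wu += w * u
        r_x += w * b       # accumulate A^T b, first component
        r_y += -u * b      # accumulate A^T b, second component
    # Normal equations: [[s_ww, -s_wu], [-s_wu, s_uu]] @ (fx, fy) = (r_x, r_y)
    det = s_ww * s_uu - s_wu * s_wu
    if abs(det) < 1e-12:
        raise ValueError("degenerate flow field (e.g. all vectors parallel)")
    fx = (s_uu * r_x + s_wu * r_y) / det
    fy = (s_wu * r_x + s_ww * r_y) / det
    return fx, fy
```

Under this simple model, a distortion that displaces image points or flow vectors unevenly across the visual field breaks the purely radial pattern and can therefore shift the estimated FOE.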

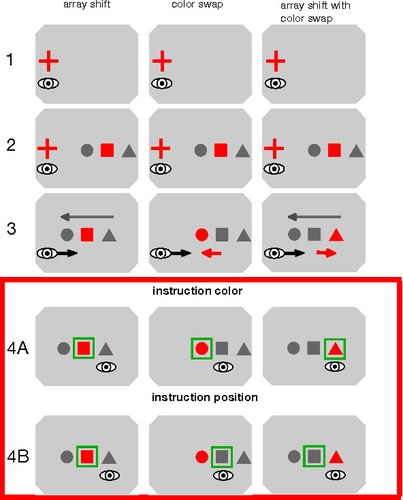

Saccadic eye movements bring objects of interest onto our fovea. These gaze shifts are essential for visual perception of our environment and for interaction with the objects within it. They precede our actions and are thus modulated by current goals. It is assumed that saccadic adaptation, a recalibration process that restores saccade accuracy in case of error, is mainly based on an implicit comparison of the expected and actual post-saccadic position of the target on the retina. However, there is increasing evidence that task demands modulate saccade adaptation and that errors in task performance may be sufficient to induce changes in saccade amplitude. We investigated whether human participants are able to flexibly use different information sources within the post-saccadic visual feedback in a task-dependent fashion. By manipulating the visual input intra-saccadically, we presented participants with either congruent post-saccadic information, indicating the saccade target unambiguously, or incongruent post-saccadic information, creating a conflict between two possible target objects. Across different task instructions, participants were able to modify their saccade behavior so as to achieve the goal of the task. They succeeded in decreasing or maintaining saccade gain, depending on what the task required, irrespective of whether the post-saccadic feedback was congruent or incongruent. It appears that action intentions prime task-relevant feature dimensions and thereby facilitate the selection of the relevant information within the post-saccadic image. Thus, participants use post-saccadic feedback flexibly, depending on their intentions and pending actions.
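Saccade gain, the quantity participants decreased or maintained, is conventionally the ratio of saccade amplitude to the initial target eccentricity, with values below 1 indicating hypometric saccades. A minimal sketch follows; the 2D coordinates (e.g. degrees of visual angle) and the helper names `saccade_gain` and `gain_change` are assumptions for illustration, not the study's analysis code.

```python
import math
from statistics import fmean


def saccade_gain(start, landing, target):
    """Saccade amplitude divided by the pre-saccadic target eccentricity."""
    eccentricity = math.dist(start, target)
    if eccentricity == 0:
        raise ValueError("target coincides with the saccade start position")
    return math.dist(start, landing) / eccentricity


def gain_change(baseline_gains, adaptation_gains):
    """Difference in mean gain between an adaptation block and baseline
    (negative values mean saccades became shorter)."""
    return fmean(adaptation_gains) - fmean(baseline_gains)


# Hypothetical example: a 10-deg target reached with a 9-deg saccade.
print(saccade_gain((0.0, 0.0), (9.0, 0.0), (10.0, 0.0)))  # -> 0.9
```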

The medial posterior parietal cortex (PPC) is involved in the complex processes of visuomotor integration. Its connections to the dorsal premotor cortex, which in turn is connected to the primary motor cortex (M1), complete the fronto-parietal network that supports important cognitive functions in the planning and execution of goal-oriented movements. In this study, we investigated the time course of functional connectivity at rest between the medial PPC and M1 using dual-site transcranial magnetic stimulation in healthy humans. We stimulated the left M1 with a suprathreshold test stimulus to elicit motor-evoked potentials in the hand, and applied a subthreshold conditioning stimulus over the left medial PPC at different inter-stimulus intervals (ISIs). The conditioning stimulus affected M1 excitability depending on the ISI, with inhibition at longer ISIs (12 and 15 ms). We suggest that these modulations may reflect the activation of different parieto-frontal pathways, with long-latency inhibition likely recruiting polysynaptic pathways, presumably through the anterolateral PPC.

In this paper, we trained multiple machine learning models to investigate whether data from contemporary virtual reality hardware enable long- and short-term locomotion predictions, which are important for the MAIA project.

To create our data set, 18 participants walked through a virtual environment while performing different tasks. The recorded positional, orientation, and eye-tracking data were used to train machine learning models to predict future walking targets. The best model for predicting the next time step was a long short-term memory (LSTM) model using positional and orientation data, with a mean error of 5.14 mm. The best model for predictions 2.5 s ahead was an LSTM using positional, orientation, and eye-tracking data, with a mean error of 65.73 cm. Gaze data offered the greatest predictive utility for long-term predictions of short distances. Our findings indicate that an LSTM model can be used to predict walking paths in VR. Moreover, our results suggest that eye-tracking data provide an advantage for this task.
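The reported errors (5.14 mm, 65.73 cm) are summary distances between predicted and actual positions. Assuming the metric is the mean Euclidean distance (the abstract does not define it), it can be computed as in the minimal sketch below. The sketch also includes a naive constant-velocity extrapolation, a common reference point against which a learned predictor such as an LSTM can be compared; this baseline and both function names are hypothetical and are not reported in the paper.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def mean_euclidean_error(predicted: Sequence[Point], actual: Sequence[Point]) -> float:
    """Average straight-line distance between paired predicted and true positions."""
    if len(predicted) != len(actual) or not predicted:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(math.dist(p, a) for p, a in zip(predicted, actual)) / len(predicted)


def constant_velocity_forecast(track: Sequence[Point], steps: int) -> Point:
    """Hypothetical baseline: extrapolate the last per-sample displacement
    `steps` samples ahead."""
    if len(track) < 2:
        raise ValueError("need at least two samples to estimate velocity")
    (x0, y0), (x1, y1) = track[-2], track[-1]
    return (x1 + steps * (x1 - x0), y1 + steps * (y1 - y0))
```

With positions sampled at a fixed rate, a 2.5 s horizon corresponds to roughly `2.5 * sampling_rate_hz` samples; the sampling rate is not stated in the abstract.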

Understanding the control of movement exerted by motor cortices is challenging, and even more so for the associative areas of parietal cortex, where many inputs are integrated. Moreover, while single-neuron approaches can address how neural computations are carried out, we need to consider the activity of the entire neural population to gain insight into what the brain is computing.

In our recent article entitled “Motor-like neural dynamics in two parietal areas during arm reaching”, published in the journal Progress in Neurobiology, we used a population approach that modelled neural activity as a hidden Markov process and showed striking similarities between frontal and parietal neural dynamics during arm movements in real 3D space. Our findings agree with recently proposed models of sensorimotor circuits, and our approach can be further applied at the network level using simultaneous neural recordings from parietal and frontal areas.
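To make the hidden Markov idea concrete, the minimal sketch below runs the forward algorithm for an HMM with known parameters, returning the filtered probability of each hidden state in each time bin given population spike counts. The emission model (Poisson counts, neurons conditionally independent given the state), the parameter values, and the name `forward_filter` are assumptions for illustration; the abstract does not specify how the model in the article was parameterized or fitted, and parameter fitting (commonly done with expectation-maximization) is not shown.

```python
import math


def poisson_pmf(k, rate):
    """Probability of observing k spikes given an expected count `rate`."""
    return math.exp(-rate) * rate ** k / math.factorial(k)


def forward_filter(counts, rates, trans, init):
    """Filtered posteriors p(state_t | counts_1..t) for a Poisson HMM.

    counts: list of time bins, each a list of spike counts (one per neuron)
    rates:  rates[s][n] = expected count of neuron n per bin in state s
    trans:  trans[i][j] = p(state_t = j | state_{t-1} = i)
    init:   init[s] = p(state_1 = s)
    """
    n_states = len(init)
    posteriors = []
    prior = list(init)
    for t, obs in enumerate(counts):
        if t > 0:
            prev = posteriors[-1]
            # Predict: propagate the previous posterior through the transition matrix
            prior = [sum(prev[i] * trans[i][j] for i in range(n_states))
                     for j in range(n_states)]
        # Update: neurons are assumed conditionally independent given the state
        like = [math.prod(poisson_pmf(k, rates[s][n]) for n, k in enumerate(obs))
                for s in range(n_states)]
        joint = [prior[s] * like[s] for s in range(n_states)]
        z = sum(joint)
        posteriors.append([j / z for j in joint])
    return posteriors


# Hypothetical 2-state example: a low-rate and a high-rate state for one neuron.
post = forward_filter(
    counts=[[0], [1], [12], [9]],
    rates=[[1.0], [10.0]],
    trans=[[0.95, 0.05], [0.05, 0.95]],
    init=[0.5, 0.5],
)
```

For long recordings, many neurons, or large counts, these products should be computed in log space to avoid numerical underflow.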

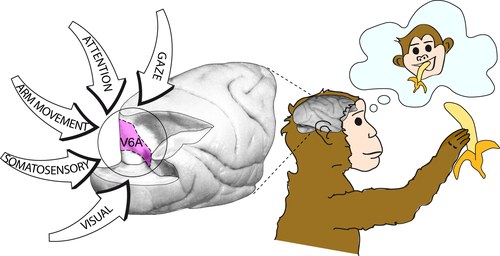

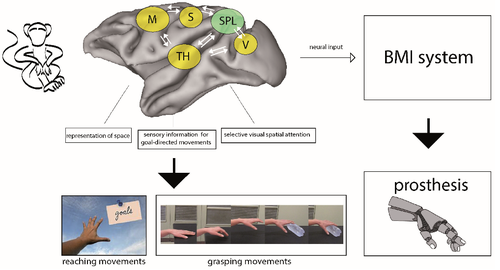

In macaques, the superior parietal lobule (SPL) integrates information from various cortical sectors, such as visual (V), motor (M), and somatosensory (S) cortex, and from the thalamus (TH), and thus forms an interface between perception and action. Recent functional imaging studies have identified putative human homologues of several macaque SPL areas. In our review article entitled "The superior parietal lobule of primates: a sensory-motor hub for interaction with the environment", published in the Special Issue "The sensory-cognitive Interplay: Insights into the neural mechanisms and circuits" of the Journal of Integrative Neuroscience (2021, Vol. 20, Issue 1: 157-171, DOI: 10.31083/j.jin.2021.01.334), we provide an account of the anatomical subdivisions, connections, and function of the macaque SPL and highlight similarities with human SPL organization, both under normal conditions and after SPL lesions. Knowledge of this part of the brain could help in understanding abnormal reaching and grasping behavior in humans and could also fuel research on brain-machine interfaces (BMIs) that enable prosthetic arms and hands to perform smoother and more accurate actions in dynamic environments.

This review focuses particularly on the origin and type of visual information reaching the SPL, and on the functional role this information can play in guiding limb interaction with objects in structured and dynamic environments. Because the SPL shares its anatomical and functional organization across human and non-human primates, knowledge of the primate SPL could help in understanding how to guide human neuroprosthetic devices in complex natural contexts.