PROJECT

EquAl: Equitable Algorithms, Promoting Fairness and Countering Algorithmic Discrimination Through Norms and Technologies.

The EquAl project addresses algorithmic evaluations, decisions, and predictions, to promote fairness and counter discrimination affecting individuals and groups. The research project is funded by the EU Commission under the NextGenerationEU program and the Italian Ministry of Education, University and Research (PRIN 2022. Ref. prot. n.: 2022KFLF3E-001 - CUP J53D23005560001).

EquAl aims:

- To provide an understanding of the concepts of algorithmic unfairness and discrimination, bridging the notions adopted in social sciences, law, statistics, and artificial intelligence.

- To identify the ways in which algorithmic unfairness originates and spreads in different social contexts, affecting individuals and groups, and particularly to identify the cases in which algorithmic unfairness leads to prohibited discrimination.

- To analyse the ways in which the law currently addresses algorithmic discrimination and propose appropriate measures to implement or upgrade the existing regulatory framework.

- To examine the way in which technologies can promote fairness and support detecting and countering algorithmic unfairness and discrimination, in particular with regard to the assessment of asylum requests.

By identifying and remedying algorithmic unfairness and discrimination, EquAl will contribute to preventing and mitigating harms to individuals and groups and favour the law-abiding deployment of AI.

EquAl is premised on the fast-growing application of AI techniques for the purposes of prediction, evaluation, and decision making.

Algorithmic approaches have the potential to transform many aspects of economic and social life, delivering cost-effective solutions and increasing the equity, efficiency, controllability, and precision of decision-making processes. However, they may also lead to new and more subtle, opaque, and resilient forms of unfairness and discrimination. Some discriminatory effects have already been addressed by case-law in Europe and beyond, and some proposals exist to regulate aspects of automated decision-making, but no comprehensive regulatory framework exists yet.

EquAl aims to place Italian legal research at the forefront in the domain of algorithmic fairness and non-discrimination, by: (a) delivering new insights on the specific nature, functioning, and evolution of fair and unfair instances of algorithmic decision-making; (b) evaluating existing anti-discrimination technologies and developing new methods to detect instances of unfairness in human and automated decisions and protect vulnerable individuals; (c) providing ethical and legal guidance; and (d) supporting public bodies, NGOs, and local communities, in particular in the examination of asylum applications. EquAl’s contribution is crucial to enhance interdisciplinary cross-fertilisation, since different research communities (legal scholars, sociologists, computer scientists, statisticians) currently use different criteria and terminologies in debating algorithmic fairness and non-discrimination. It is equally crucial to ensure that the corpus of EU and Italian anti-discrimination law, regulations, and case-law can be effectively applied in the algorithmic domain.

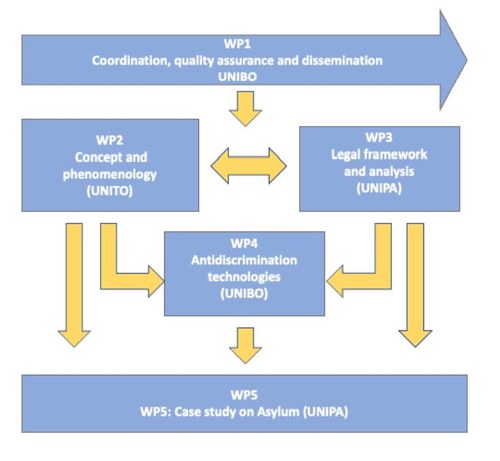

PERT chart of the project development.